OpenAI, closed future

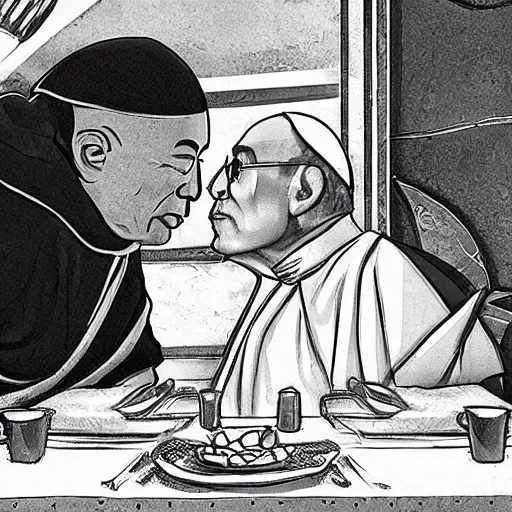

“Dalai Lama sucking Pope Francis’ tongue in a Parisian Bistro at sunrise.”

A quote that evokes a seemingly improbable event. Well, I say that, but if it were a child instead of the Pope it would not be so unlikely. Yet, regardless of how likely the event is, a visual representation of it might very well be readily available as soon as OpenAI makes SORA generally available to the public. SORA is an AI model that can create a wide range of videos from a text prompt such as the one at the beginning of this article.

We live in scary times: armed conflicts are popping up left and right, mass surveillance is almost ubiquitous, climate change is an ever-present threat and nations are falling back on ultra-nationalistic tropes. Sometimes it feels like there isn’t much to be hopeful about. You’re just sitting in your corner of the world trying to make a living, and in comes Artificial Intelligence, waltzing through the door and threatening to completely revolutionize the world and upend millions and millions of lives.

AI can be great; in fact, I use it a lot in my day-to-day job as a DevOps engineer, where it’s a very powerful helper. However, I won’t lie: I see its potential to make many jobs disappear, and to illustrate this point, let us go back to SORA. SORA is a remarkable technological achievement, a model that seemingly has a notion of physics, context and the different layers that compose a video. As a result, it appears to be far ahead of the competition, with a lot of potential yet to be unlocked.

As with most things in life, there are positives and negatives. There are some positive use cases that I can imagine or have seen mentioned, such as product prototyping, marketing videos, film conception and lowering the barrier to entry for careers like video editing. Surely there will be more that I haven’t seen mentioned yet.

Whatever the somewhat tepid positives this AI model might bring, I see more dangers and potential risks than benefits. OpenAI says, rightfully, that it is concerned about possible abuses of this technology, so it is working with teams and the community to make the product as safe as possible. Additionally, all content generated by SORA will be tagged with metadata following the C2PA standard, which I consider dangerous and have already discussed in another article.

Before pointing out some of the potential dangers I see in this product, I think it’s important to mention that the technology and knowledge used to create it seem to be mostly public, in particular diffusion models. In other words, much of it was discovered and shared by the developer and scientific community in a fairly transparent and open manner, so that everyone can benefit from it. The technical article published by OpenAI shows this, but it also suggests that only companies in OpenAI’s position could build this model.

In the technical article, the team showed how much computational power matters for this product. “Just” by using more compute, they can dramatically improve the quality of the output: with 32 times the base training compute, the generated videos were substantially more realistic and believable.

The negatives are, to me, much clearer. OpenAI is able to use these computational resources largely thanks to its partnership with Microsoft, a privilege that most other companies and startups do not have. The code for this model, like that of most of OpenAI’s other models, is not open source, so the wider developer community can’t verify that users aren’t being taken advantage of. Furthermore, as the world of tech constantly demonstrates, it is practically impossible to build something without security flaws. In this case, what will happen when someone exploits this model, or improves on it and makes it accessible enough that anyone with a computer can generate any video they want?

I am very afraid of people creating pornographic videos of someone they dislike, or combining this technology with other increasingly realistic technologies, such as deepfakes and voice emulation, to create, for example, a video of Donald Trump declaring war on China.

The ability to create content that enables the exponential growth of incredibly realistic misinformation scares me. Even experts in the field have been surprised by the advances in AI in recent years, and our governments lack the speed and capacity to regulate this rapid progress enough to make it at least ethical, let alone to prevent its possible repercussions. New jobs will be created, many will be irrevocably altered for the worse, misinformation will grow, and it will become increasingly hard to tell what’s true and what’s not. The world is changing.

Personally, I have no answer to this problem, and I have not yet seen a satisfactory answer from the community, but I see my fear shared by many professionals. I believe that, as a society, we have to consider to what extent technological advancement, and the way it is achieved, is necessary or moral. Until we reach a consensus on this, we will suffer greatly and in ways never seen before. Skynet won’t destroy humanity, but an irresponsible use of AI certainly will.